Last week, alongside the ongoing AI race among large enterprises, some people began to push back against AI. Tech figures such as Elon Musk and researchers such as Yoshua Bengio, who won the Turing Award for his work on deep learning, jointly called for a pause on AI research. Italy went further and banned ChatGPT outright.

Here's what happened the previous week:

https://xlog.app/_site/cryptonerdcn/preview/50622-14

March 27th

Microsoft published the latest version of its paper "Sparks of Artificial General Intelligence: Early Experiments with GPT-4". The paper, which runs to more than 100 pages, discusses how AI researchers develop and improve large language models. Its conclusion is that GPT-4 does not merely memorize things; it exhibits a form of general intelligence.

https://arxiv.org/abs/2303.12712

In short, the paper reaches this conclusion by having GPT-4 solve difficult problems and observing how it works through them.

"Our goal is to generate novel and challenging tasks and questions that convincingly demonstrate that GPT-4 goes far beyond memorization, with a deep and flexible understanding of concepts, skills, and domains... We acknowledge that this approach is somewhat subjective and informal, and may not meet the rigorous standards of scientific evaluation."

Some of the tests they conducted are as follows:

- GPT-4 can generate images by understanding text and using code

Unlike AI image generators such as DALL-E 2, GPT-4 was trained only on text. It draws what is requested by writing code.

Of course, the model may simply have memorized the code for drawing a cat. However, the paper's further tests suggest that it is not just memorizing and can actually understand drawing tasks.

- GPT-4 cannot understand musical harmony

The team had the model use ABC notation to compose new music and then modify those compositions. It was also able to explain the compositions using technical terms. However, the model does not seem to understand what harmony is. It also cannot identify where a short melody in ABC notation comes from, even when the melody is from a well-known composition.

- Writing code

GPT-4 is very proficient in many programming languages. It can reason about what its code does when executed, simulate the effects of instructions, and explain its work in natural language. According to the team, GPT-4 is not perfect yet, but its ability to write code is definitely better than that of an average software engineer.

- Mathematical ability: Applied knowledge

GPT-4 is good at mathematics, but it still falls well short of expert mathematicians.

The research team concluded that GPT-4 is much better in mathematics than previous models, including those explicitly trained and fine-tuned for mathematics. However, they also concluded that the model is still far from the level of an expert and cannot conduct mathematical research.

- Interaction with the world

GPT-4 has not been trained on the latest data, so it cannot answer simple current-affairs questions such as "Who is the current president of the United States?" The model also struggles with symbolic operations, such as computing a square root involving two large numbers. However, with the right prompts, GPT-4 can search the internet and potentially find the correct answers to current-affairs questions. This shows that GPT-4 can use different kinds of tools to obtain correct answers.
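The tool-use pattern described above can be sketched roughly as follows. This is only an illustration and not the paper's setup: the `CALC(...)` / `SEARCH(...)` request format, the `run_tool` helper, and the stubbed search result are all hypothetical, and no real model or search engine is called.

```python
import math
import re

# Hypothetical tool-call format (an assumption for this sketch): the model
# emits a line such as CALC(sqrt(16 * 4)) or SEARCH(some query), and the
# host program runs the tool and hands the result back as text.
TOOL_CALL = re.compile(r"^(CALC|SEARCH)\((.*)\)$")


def calc(expression: str) -> str:
    """Evaluate an arithmetic expression in which only sqrt is available."""
    # eval is limited to an empty builtins dict plus sqrt. That is enough
    # for a demo, but it is not a hardened sandbox.
    value = eval(expression, {"__builtins__": {}}, {"sqrt": math.sqrt})
    return str(value)


def search(query: str) -> str:
    """Stub standing in for a real web search (assumption)."""
    return f"[search results for: {query}]"


def run_tool(model_output: str) -> str:
    """Dispatch one tool request, or pass plain text through unchanged."""
    match = TOOL_CALL.match(model_output.strip())
    if match is None:
        return model_output
    name, argument = match.groups()
    return calc(argument) if name == "CALC" else search(argument)
```

In a real system the returned text would be appended to the prompt so the model can continue its answer with the tool result in hand.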

- Real-world problems

The team tested whether GPT-4 could assist humans in solving real-world physical problems. One of the authors of the paper acted as a human agent, and the model helped him identify and (possibly) fix a leak in the kitchen. The team admits that they only did a few simulations of real scenarios, so they cannot draw definitive conclusions about the effectiveness of the model.

- Discernment

The team tested the model's discernment by having it identify personally identifiable information (PII). The test gave the model a specific sentence, asked it to identify the fragments that constitute PII, and then counted those fragments. This is a fairly difficult test because there is no clear definition of what constitutes personally identifiable information.
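To make the "identify the fragments, then count them" format concrete, here is a minimal sketch. It is not how the paper evaluated GPT-4: the two regular expressions and the helper names `find_pii` and `count_pii` are hypothetical, and they are deliberately narrow.

```python
import re

# Hypothetical, deliberately narrow patterns. Real PII has no such clean
# definition, which is exactly why the task is hard.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def find_pii(sentence: str) -> list[tuple[str, str]]:
    """Return (label, fragment) pairs for every pattern match."""
    fragments = []
    for label, pattern in PII_PATTERNS.items():
        for match in pattern.finditer(sentence):
            fragments.append((label, match.group()))
    return fragments


def count_pii(sentence: str) -> int:
    """Count the PII fragments found in one sentence."""
    return len(find_pii(sentence))
```

For a sentence like "Contact Jane at jane.doe@example.com or +1 555-123-4567." this sketch finds the email address and the phone number but misses the name, which illustrates why fixed rules fall short and judgment is needed.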

Limitations of GPT-4

The team believes that the main limitation of GPT-4 is that it lacks internal dialogue. Internal dialogue would let the model perform multi-step calculations and store intermediate results on the way to the correct answer. As shown in the figure, asking for the answer directly produces an incorrect result, but asking for the calculation steps first yields the correct one.
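The idea of storing intermediate results can be illustrated with a small sketch. The expression and the helper `evaluate_with_steps` are hypothetical and chosen only for illustration; the point is that each partial result is written down before the final sum is formed, much as a step-by-step prompt asks the model to do.

```python
def evaluate_with_steps(a: int, b: int, c: int, d: int) -> tuple[int, list[str]]:
    """Compute a*b + c*d while recording each intermediate result."""
    steps = []
    first = a * b
    steps.append(f"{a} * {b} = {first}")
    second = c * d
    steps.append(f"{c} * {d} = {second}")
    total = first + second
    steps.append(f"{first} + {second} = {total}")
    return total, steps


total, steps = evaluate_with_steps(7, 4, 8, 8)
for line in steps:
    print(line)
```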

March 28th

Google Cloud and Replit jointly released a code generation tool to compete with Microsoft's GitHub Copilot X.

Replit is a company that provides an online collaborative development environment and had previously rejected an acquisition offer from Microsoft. In November of last year, it released its own code generation tool, Ghostwriter.

https://replit.com/site/ghostwriter

March 29th

The Future of Life Institute published an open letter calling for a pause on AI research. It gathered about a thousand signatures, including IT industry figures such as Steve Wozniak and Elon Musk as well as researchers.

There were some minor incidents, however. Because signatures were not verified, a number of celebrities inexplicably appeared on the list, including Sam Altman, the co-founder and CEO of OpenAI (since removed).

On the same day, Joshua Yang from the University of Southern California claimed that hardware has become a bottleneck in the development of artificial intelligence. The scale of the neural networks required for AI doubles every 3.5 months, but the hardware capability needed to process them doubles only every 3.5 years. His team has developed a new type of chip with the highest-precision memory to date, which is intended for edge AI (AI in portable devices) and could overcome the hardware performance bottleneck.
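To see how quickly the gap between those two doubling rates opens up, here is a small back-of-the-envelope calculation. It assumes clean exponential growth at exactly the quoted rates, which is a simplification; the function name `growth_factor` is just for illustration.

```python
def growth_factor(elapsed_months: float, doubling_period_months: float) -> float:
    """Growth multiple after elapsed_months, given a fixed doubling period."""
    return 2 ** (elapsed_months / doubling_period_months)


elapsed = 3.5 * 12  # one hardware doubling period, in months (42)

model_growth = growth_factor(elapsed, 3.5)         # 12 doublings
hardware_growth = growth_factor(elapsed, 3.5 * 12)  # 1 doubling
gap = model_growth / hardware_growth

print(f"model scale:  x{model_growth:.0f}")
print(f"hardware:     x{hardware_growth:.0f}")
print(f"gap:          x{gap:.0f}")
```

Under these assumptions, in the 3.5 years it takes hardware to double once, model scale doubles 12 times, so demand grows about 4096-fold against a 2-fold gain in hardware.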

https://techxplore.com/news/2023-03-chip-greatest-precision-memory-date.html

March 30th

The Korean version of The Economist revealed that Samsung employees were directly feeding confidential semiconductor-related information to ChatGPT, which could lead to information leakage. Samsung stated that they have reminded employees to use caution and may consider prohibiting the use of ChatGPT on the company's intranet if such incidents occur again.

March 31st

The Italian Data Protection Authority announced that it has blocked the controversial chatbot ChatGPT. The decision "takes immediate effect" and will "temporarily restrict OpenAI from processing Italian user data."

The authority also stated that ChatGPT has no system for verifying the age of its users, resulting in children receiving answers that are "completely unsuitable for their developmental and self-awareness stages."

The move has been criticized by the Italian Deputy Prime Minister, who believes that a block imposed over privacy issues seems excessive.

April 1st

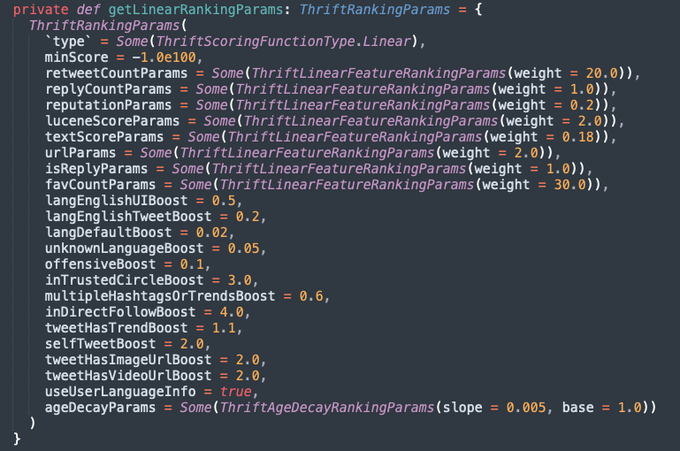

Twitter open-sourced its recommendation algorithm, less than a week after the previous code leak incident.

The most notable part is the recommendation weights, with likes (30x), retweets (20x), follows (4x), and blue checkmarks (2-4x) carrying the largest boosts.
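As a rough illustration of what such weights mean for ranking, the sketch below scores tweets using only the like and retweet weights quoted above. It assumes the weights combine as a simple linear sum, which this post does not state and which is not taken from Twitter's released code. The follow and blue-checkmark boosts are left out because how they combine is not described here. The function name `engagement_score` is hypothetical.

```python
# Weights quoted in this post; the linear combination is an assumption.
LIKE_WEIGHT = 30
RETWEET_WEIGHT = 20


def engagement_score(likes: int, retweets: int) -> int:
    """Hypothetical linear score built from the quoted weights."""
    return LIKE_WEIGHT * likes + RETWEET_WEIGHT * retweets


# Two made-up tweets, ranked by the hypothetical score.
tweets = {
    "tweet_a": engagement_score(likes=10, retweets=5),
    "tweet_b": engagement_score(likes=4, retweets=12),
}
ranked = sorted(tweets, key=tweets.get, reverse=True)
print(ranked)
```

Under this assumption a like counts for 1.5 times as much as a retweet, so a tweet with fewer total interactions can still rank higher if more of them are likes.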

If this post is helpful, please subscribe and share. You can also follow me on Twitter. I will bring you more information about Web3, Layer2, AI, and Japan-related news.